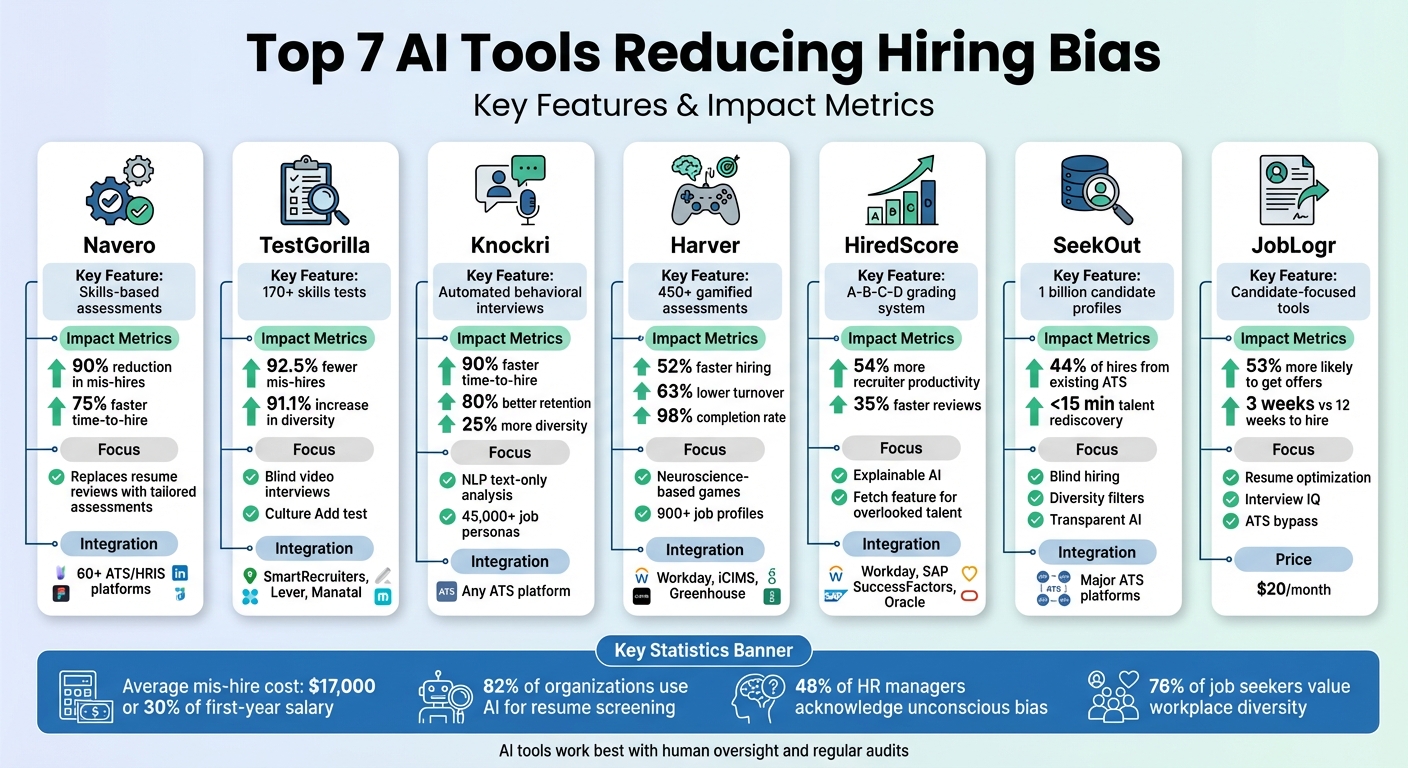

Top 7 AI Tools Reducing Hiring Bias

Hiring bias can lead to costly mistakes, like mis-hires averaging $17,000 or up to 30% of a new hire's first-year salary. AI tools are designed to counteract biases such as affinity bias, confirmation bias, and the halo effect, which often distort hiring decisions. These tools anonymize candidate data, focus on skills over credentials, and use standardized evaluations to ensure fairer hiring outcomes.

Here’s a quick look at seven AI tools tackling hiring bias:

- Navero: Replaces subjective resume reviews with skills-based assessments, reducing mis-hires by 90%.

- TestGorilla: Offers over 170 tests for hard and soft skills, with features like blind video interview evaluations.

- Knockri: Automates structured behavioral interviews, focusing on skills and avoiding biased historical data.

- Harver: Uses gamified assessments and neuroscience-based tools to evaluate potential, not backgrounds.

- HiredScore: Grades candidates using an explainable, auditable system while surfacing overlooked talent.

- SeekOut: Provides tools like blind hiring and diversity filters to promote equitable hiring practices.

- JobLogr: Focuses on job seekers, helping them optimize resumes and prepare for interviews.

These tools streamline hiring, reduce unconscious bias, and help organizations build diverse, high-performing teams.

7 AI Tools Reducing Hiring Bias: Features and Benefits Comparison

1. Navero

Navero transforms the hiring process by replacing subjective resume reviews with tailored assessments that measure candidates' actual skills. By using standardized evaluations, it claims to cut the time-to-hire by 75% and reduce mis-hires by 90%. Let’s take a closer look at what makes Navero stand out.

Skills-Based Assessments

Navero’s assessments are laser-focused on the specific skills required for each role. Instead of relying on generalized tests, the platform creates custom evaluations designed to verify practical competence. Over time, its proprietary data system refines and improves matching accuracy, making the process even more precise.

Bias Reduction Mechanisms

To ensure fairness, Navero removes personal identifiers from assessments and employs multiple anti-cheating tools like plagiarism detection, identity verification, webcam monitoring, and screen tracking. These measures help create unbiased evaluations, which are key to building a diverse talent pool. Founder Nathan Trousdell emphasizes this mission:

"AI must improve - not replace - human decision-making in hiring"

ATS Integration

Navero seamlessly integrates with over 60 ATS and HRIS platforms, making it easy to incorporate into existing hiring workflows. It’s particularly designed for small to mid-sized businesses, offering advanced hiring tools without the complexity of large enterprise systems.

2. TestGorilla

TestGorilla takes a fresh approach to hiring by replacing traditional resume screening with objective talent assessments. With a library of over 170 tests, the platform evaluates hard skills, soft skills, and personality traits. This ensures candidates are assessed based on their actual abilities rather than their educational background or personal connections. By focusing on skills-based evaluations, TestGorilla aligns with the growing demand for fairer and more inclusive hiring practices. Leveraging AI, the platform minimizes bias and provides a wide range of tools to refine the hiring process.

Skills-Based Assessments

Instead of relying on degrees or job titles, TestGorilla emphasizes job-relevant skills. Its extensive test library includes areas like IT business analysis, cognitive abilities, and personality traits. Research backs this approach: studies show that "years of experience" correlates poorly with job performance (r≈0.06), highlighting the importance of skills-focused hiring. Companies using TestGorilla have reported impressive outcomes - 92.5% experienced fewer mis-hires, and 91.1% noted an increase in workforce diversity.

Bias Reduction Mechanisms

TestGorilla incorporates several features to reduce bias in the hiring process. For instance, its AI-powered video interviews evaluate candidates based solely on their speech transcripts, ignoring visual cues like facial expressions, appearance, or accents. Additionally, personal identifiers such as names, photos, pronouns, and locations are removed before evaluations begin.

One standout feature is the "Culture Add" test, which moves away from the traditional "culture fit" concept. Instead of seeking candidates who mirror existing employees - a practice often rooted in affinity bias - this test identifies individuals who align with company values while bringing fresh perspectives. For example, digital agency TakeFortyTwo replaced subjective questionnaires with TestGorilla's Culture Add test, creating a hiring process that prioritizes values and diversity.

Amer Odeh, Senior Measurement Scientist at TestGorilla, highlights the reliability of AI in evaluations:

"Human scoring can swing unpredictably depending on mood, fatigue, or even the time of day. Our AI scoring tool, by contrast, never has an 'off' day. It delivers steady, reliable evaluations for every applicant, no matter when or where."

ATS Integration

TestGorilla goes beyond assessments by integrating seamlessly with major Applicant Tracking Systems (ATS) like SmartRecruiters, Lever, and Manatal. This integration simplifies the hiring process by automating workflows and centralizing data. Companies can easily transition from resume screening to skills testing without juggling multiple platforms. Notably, even the free plan includes AI resume scoring, making these tools accessible to businesses of all sizes.

3. Knockri

Knockri addresses hiring bias by automating structured behavioral interviews (SBIs), ensuring all candidates answer the same set of questions. The platform focuses on objective, skill-based evaluations to promote fair hiring practices. Using Natural Language Processing (NLP), Knockri analyzes only the text transcript of candidate responses, deliberately ignoring visual elements like appearance, facial expressions, and accents. By focusing solely on the content and quality of responses, Knockri ensures evaluations remain unbiased, reinforcing the importance of precise AI tools in recruitment.

Skills-Based Assessments

Knockri offers access to over 45,000 job personas validated by I/O psychologists, measuring critical skills like collaboration and problem-solving. Research shows that structured behavioral interviews are 16 times more predictive of job performance than reviewing prior experience and twice as predictive as personality tests or unstructured interviews. Companies using Knockri have reported a 90% reduction in time-to-hire and an 80% improvement in employee retention.

Bias Reduction Mechanisms

Unlike many AI hiring tools, Knockri avoids using historical hiring data, which often contains ingrained human biases. Instead, the platform relies on scientifically validated, objective data to predict job performance. A bias filter allows recruiters to review results by candidate ID, minimizing unconscious bias. This approach has led to a 25% increase in workforce diversity and makes Knockri's assessments four times more effective than traditional methods.

Robert Gibby, PhD, SIOP Fellow and Director of People Analytics at Facebook, has praised Knockri's approach:

"We trust Knockri's ability to combine the right mix of science and technology to create inclusive assessment experiences with results predictive of success."

ATS Integration

Knockri integrates smoothly with any Applicant Tracking System (ATS), allowing recruiters to create assessments directly in the Knockri dashboard and link them to job postings. The platform supports bulk invitations for candidates via existing ATS platforms and enables easy data sharing. With over 1 million assessments completed, Knockri has earned a 4.8/5 candidate experience rating and a 9.2/10 average recruiter Net Promoter Score. This seamless integration helps recruiters maintain their existing workflows while enhancing hiring efficiency.

4. Harver

Harver shifts the focus from subjective resume reviews to objective, data-driven assessments. With over 450 assessment options, the platform offers tools like cognitive ability tests, behavioral games, job knowledge evaluations, and realistic job previews to evaluate candidates effectively. After acquiring pymetrics, Harver incorporated neuroscience-based nonverbal games to assess soft skills, prioritizing a candidate's potential rather than their background. These tools form the backbone of Harver's approach to comprehensive candidate assessments.

Skills-Based Assessments

Harver provides 900+ pre-built job profiles designed to match candidates with multiple open roles. Its gamified behavioral assessments boast an impressive 98% completion rate, far surpassing traditional, text-heavy methods. Regina Green, Director of Human Resources at Valvoline, highlighted the platform's impact:

"With Harver, we can take a scientific approach to hiring. Now we spend less time recruiting and more time developing talent."

Companies using Harver have seen a 52% reduction in time to hire and a 63% drop in employee turnover.

Bias Reduction Mechanisms

Harver's AI tools are designed to reduce bias in hiring by identifying and removing potentially discriminatory questions. The platform rigorously tests multiple algorithms and implements the least biased version, ensuring compliance with the 4/5ths rule. Importantly, demographic data is excluded from AI models during decision-making and is only used post-deployment to monitor and address bias. Paramount, for instance, experienced a 254% increase in ethnic diversity for an entry-level sales role by leveraging Harver's debiasing features. Additionally, Harver achieves a 97% candidate satisfaction rate while promoting fairer hiring outcomes.

ATS Integration

Harver integrates seamlessly with major Applicant Tracking Systems (ATS) like Workday, iCIMS, and Greenhouse, enhancing existing workflows with its intelligence layer. Sarah Jackson, VP of Global Talent Acquisition at Sage, shared her experience with Harver's pymetrics assessments in their global Early Careers programs:

"With pymetrics, we can streamline the process, ensure inclusivity, and ensure a fair and engaging assessment of all candidates."

Rather than replacing human judgment, Harver serves as a decision-support tool, providing recruiters with objective, science-based insights while keeping them at the center of the hiring process.

5. HiredScore

HiredScore uses a compliance-focused "smart mirror" system to evaluate candidates on a simple A, B, C, D scale, based on how well their submitted details align with job requirements defined by employers. The system strictly analyzes candidate-provided data, ensuring that external biases don’t influence the process.

Bias Reduction Mechanisms

HiredScore’s AI stands out for being explainable and auditable, offering transparency by showing recruiters exactly why a candidate received a particular grade. It avoids the pitfalls of "black box" AI, where decision-making processes are often opaque. An independent bias audit conducted in September 2024 assessed HiredScore's Spotlight AI tool using data from 10 global enterprise clients over a year (September 2023 to September 2024). Applying the 4/5ths rule, the audit found no evidence of disparate impact across race, ethnicity, or gender categories. This rule flags potential adverse impact when any group’s selection rate falls below 80% of the rate for the group with the highest selection rate.
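As a worked illustration of the 4/5ths rule (the numbers here are hypothetical and not taken from the audit), the impact ratio for a group $g$ compares its selection rate $s_g$ with the highest rate among all groups:

$$
\mathrm{IR}_g = \frac{s_g}{\max_h s_h}, \qquad s_g = \frac{\text{selected}_g}{\text{applicants}_g}
$$

If one group has 30 of 100 applicants selected ($s = 0.30$) and another has 21 of 100 ($s = 0.21$), the second group's ratio is $0.21 / 0.30 = 0.70$. That is below $0.80$, so the result would be flagged for closer review.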

Rachel Meyers from HR Tech Space highlighted this transparency, saying:

"HiredScore is an auditable, ethical, and explainable AI in contrast to black-box AI, where it is not possible to explain how a decision is made."

AI-generated grades are recommendations, allowing recruiters to retain full decision-making authority. This clear and transparent approach underpins the platform’s diversity-oriented features.

Diversity-Focused Features

HiredScore enhances diversity efforts by leveraging its Fetch feature, which identifies overlooked candidates from prior applicant pools. This minimizes dependence on external sourcing, a process that can inadvertently introduce bias. Companies using HiredScore have reported a 54% increase in recruiter productivity within 10 months and 35% faster hiring manager reviews. Diversity insights are seamlessly integrated into recruiters’ workflows, enabling real-time adjustments to sourcing strategies instead of relying on after-the-fact analysis.

Additionally, HiredScore surfaces candidates from existing talent pools, achieving 70% role coverage. This helps organizations discover diverse talent already within their reach, reducing the need for external searches.

ATS Integration

HiredScore integrates seamlessly with major ATS platforms like Workday, SAP SuccessFactors, and Oracle, extending its unbiased grading and diversity tools into everyday hiring workflows. Workday’s acquisition of HiredScore further embeds the platform into its HR ecosystem. Through this integration, the Fetch feature scans existing ATS databases for qualified candidates who applied for different roles, cutting sourcing costs while broadening diversity. Workday describes the system as:

"The technology acts like a 'smart mirror' reflecting back the extent to which candidates fit the job requirements set by the employer."

Recruiters, who typically spend up to 40% of their time on manual screening, benefit from HiredScore’s automation. The platform not only saves time but also ensures compliance with regulations like GDPR, EEOC, and NYC Local Law 144.

6. SeekOut

SeekOut addresses hiring bias by focusing on skills-based evaluations and offering tools that promote transparency, ensuring candidates are assessed on their qualifications. With access to over 1 billion candidate profiles, the platform stands out for its commitment to fair hiring practices, as demonstrated by completing a third-party AI bias audit in September 2025. By combining detailed profiling with clear evaluation processes, SeekOut offers a unique approach to inclusive hiring.

Bias Reduction Mechanisms

SeekOut’s Bias Reducer feature facilitates blind hiring by hiding candidate identifiers during initial screenings. This ensures recruiters focus solely on skills and experience. Beyond concealing identifiers, the tool links candidate evaluations to specific, role-based rubrics. It also employs AI-driven scorecards to match candidates with custom Ideal Candidate Profiles, prioritizing genuine qualifications over simple keyword matches. The platform operates as transparent AI, allowing recruiters to understand the reasoning behind each candidate's score, aligning with its mission to minimize bias in hiring.

AI also plays a role in candidate discovery, but final decisions remain with recruiters to ensure compliance with EEOC and OFCCP guidelines. SeekOut’s Responsible AI Principles emphasize this commitment:

"SeekOut builds AI capabilities that advance inclusion of underrepresented populations and reduces economic, social, gender and other inequalities."

Diversity-Focused Features

In addition to reducing bias, SeekOut helps organizations enhance workforce diversity through advanced filtering tools. Its Diversity Filters let recruiters identify talent from underrepresented groups with one click. Rather than relying on self-reported data, the underlying search algorithms infer diversity characteristics, reportedly with high accuracy. Recruiters can also track diversity metrics - such as race, gender, and employment history - across talent pools to set realistic DEIB (Diversity, Equity, Inclusion, and Belonging) goals based on actual market trends. This matters because 76% of job seekers consider workplace diversity an important factor when choosing companies and job offers.

SeekOut also supports internal mobility and retention by identifying underrepresented employees for leadership opportunities. Mindy Fineout, Head of Recruiting at Discord, shared her experience:

"Spot exceeded our expectations for the speed with which they have delivered high-quality candidates. The agentic AI technology allows our talent team to focus on understanding role needs and closing the high-quality prospects provided."

ATS Integration

SeekOut integrates seamlessly with major ATS platforms. This two-way integration allows recruiters to manage diverse talent pools within a single interface. Its Talent Rediscovery feature scans previous applicants in the ATS - often in under 15 minutes - to identify qualified candidates, streamlining the process. This is especially impactful given that 44% of successful hires are already within a company’s existing ATS.

7. JobLogr

JobLogr stands out by focusing on the job seeker, unlike many AI tools designed to assist employers in reducing bias. At $20 per month, this platform helps candidates overcome recruitment hurdles, especially those caused by Applicant Tracking Systems (ATS). These systems often filter out qualified applicants due to strict keyword matching or unconventional career paths, leaving many talented individuals overlooked.

Bypassing ATS Challenges

JobLogr uses AI to tailor resumes, highlighting relevant skills and experiences while providing feedback through its Resume Analyzer. This tool ensures that candidates with non-traditional backgrounds - like career changers or those returning to the workforce - aren't unfairly dismissed by ATS filters. Considering that 90% of employers use ATS to rank applicants, this feature can be a game-changer.
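To make the keyword problem concrete, here is a minimal sketch of the kind of strict keyword check an ATS filter might apply. It is not JobLogr's algorithm or any vendor's actual logic; the function names and the sample keywords are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into simple word tokens."""
    return set(re.findall(r"[a-z0-9+#]+", text.lower()))


def keyword_coverage(resume: str, required_keywords: list[str]) -> tuple[float, list[str]]:
    """Return the share of required keywords found verbatim, plus the missing ones.

    Assumption: each keyword is a single word. Exact matching like this is
    what makes strict filters brittle, since synonyms count as misses.
    """
    if not required_keywords:
        return 1.0, []
    tokens = tokenize(resume)
    missing = [k for k in required_keywords if k.lower() not in tokens]
    coverage = (len(required_keywords) - len(missing)) / len(required_keywords)
    return coverage, missing
```

A resume that says "built dashboards" but never names the specific tool listed in the job description would show that tool as missing, which is the kind of gap a tailoring step aims to surface before the application is submitted.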

Another standout feature is the voice-to-resume builder. It enables users to verbally share their experiences, turning their input into professional, ATS-friendly resumes. This approach makes resume creation more accessible for individuals who may struggle with traditional formats.

The results speak for themselves: candidates using AI tools like JobLogr are 53% more likely to land job offers and apply to 41% more positions on average. JobLogr users, in particular, have seen hiring times drop dramatically, securing offers in as little as three weeks compared to the usual 12-week timeline - up to four times faster.

Leveling the Playing Field

Beyond resumes, JobLogr supports candidates during the interview process. Its Interview IQ tool generates tailored questions and talking points based on the job description and resume, helping users feel prepared and confident. This reduces anxiety and mitigates subjective bias during interviews. Alisa Hill, Director of Business Strategy and Operations, highlighted the tool's practicality:

"provides genuine insight into potential interview questions tailored to your resume and job description"

Cover letters are another area where JobLogr shines. AI-generated cover letters have been shown to increase interview requests by 50%, giving candidates a polished, professional edge.

Jenny Foss, Career Coach and Founder of JobJenny, praised the platform, saying:

"It's rare to find a tool that makes job searching this survivable"

JobLogr also includes a free application tracking module and offers a 7-day premium trial, allowing users to explore its full range of features. By centering its tools on the candidate's experience, JobLogr provides a meaningful way to address biases and improve outcomes in the hiring process.

What to Look for in AI Hiring Tools

When tackling the challenges of AI-powered hiring, it’s crucial to choose tools designed to actively reduce bias. The most effective tools stand out because they do more than just automate - they aim to create a fairer hiring process.

Blind resume screening is a must-have feature. The tool should automatically strip away identifying details like names, photos, pronouns, graduation dates, and addresses. Why? Research shows that removing these identifiers helps evaluators focus solely on skills and experience, leading to more equitable outcomes. A well-designed AI tool ensures these potential bias triggers are completely removed, keeping the process fair and objective.
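A minimal sketch of what this stripping step could look like is shown below. The field names (`name`, `photo_url`, `summary`, and so on) are hypothetical, and a real ATS export will use its own schema; the regular expressions are deliberately simple and would miss many real-world cases.

```python
import re

# Hypothetical field names for the identifiers listed above.
IDENTIFYING_FIELDS = {"name", "photo_url", "pronouns", "graduation_year", "address"}

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
YEAR_RE = re.compile(r"\b(?:19|20)\d{2}\b")


def redact_profile(profile: dict, candidate_id: str) -> dict:
    """Return a copy with identifying fields dropped and free text scrubbed."""
    blind = {k: v for k, v in profile.items() if k not in IDENTIFYING_FIELDS}
    blind["candidate_id"] = candidate_id  # reviewers see an ID, not a name

    summary = str(profile.get("summary", ""))
    # Scrub emails first, since they often contain parts of the name.
    summary = EMAIL_RE.sub("[EMAIL]", summary)
    for part in str(profile.get("name", "")).split():
        summary = re.sub(rf"\b{re.escape(part)}\b", "[REDACTED]", summary, flags=re.IGNORECASE)
    # Note: this removes every four-digit year, including employment dates.
    summary = YEAR_RE.sub("[YEAR]", summary)
    blind["summary"] = summary
    return blind
```

Dropping structured fields is the easy part. Free-text sections are where identifiers tend to leak, so the sketch scrubs the summary as well, at the cost of also hiding employment years.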

Equally important are explainable AI outputs. It’s not enough for a system to rank or score candidates - you need to understand why those decisions were made. As Buddhi Jayatilleke puts it:

"Bias in the AI hiring process often looks like efficiency until you measure outcomes by group."

Look for tools that provide clear, easy-to-understand explanations for their decisions, rather than relying on opaque, black-box algorithms.

To further ensure fairness, tools should support structured interview formats and skills-based assessments. Standardized interviews, where every candidate answers the same job-relevant questions, eliminate subjective evaluations. Similarly, validated skills tests shift the focus away from pedigree indicators like university names and onto actual capabilities. Considering that 48% of HR managers acknowledge unconscious biases in their hiring processes, these features are critical safeguards.

Lastly, prioritize tools with ATS compatibility and diversity metrics tracking. Integration with your current Applicant Tracking System ensures these bias-reduction features work seamlessly within your workflow. Meanwhile, real-time dashboards that track demographic data - like pass-through rates - allow you to identify where diverse candidates may be dropping off. With 82% of organizations already using AI to review resumes, only those actively monitoring outcomes can ensure their tools deliver fairness alongside efficiency.

These features form the foundation for using AI hiring tools responsibly and effectively.

How to Implement AI Hiring Tools

Introducing AI hiring tools requires a thoughtful approach to ensure fairness and accountability. Start by conducting quarterly audits to check for representation gaps in training data and identify proxy variables like zip codes or university names that could unintentionally influence outcomes. Use flip tests - for example, swapping names like "Emily" with "Jamal" - to confirm that the AI scores candidates consistently across different demographics. These steps are essential for building the transparency needed to meet compliance standards.
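The flip test can be sketched as follows. The scoring functions here are stand-ins written for illustration, not any vendor's model; in practice `score_fn` would wrap whatever system you are auditing, and the `{NAME}` placeholder convention is an assumption of this sketch.

```python
from typing import Callable


def flip_test(
    resume_template: str,
    score_fn: Callable[[str], float],
    name_pairs: list[tuple[str, str]],
    tolerance: float = 0.01,
) -> list[tuple[str, str, float, float]]:
    """Score the same resume under each name and return pairs that diverge."""
    failures = []
    for name_a, name_b in name_pairs:
        score_a = score_fn(resume_template.replace("{NAME}", name_a))
        score_b = score_fn(resume_template.replace("{NAME}", name_b))
        if abs(score_a - score_b) > tolerance:
            failures.append((name_a, name_b, score_a, score_b))
    return failures


def keyword_scorer(text: str) -> float:
    """Stand-in scorer that only looks at skills."""
    skills = ("python", "sql", "forecasting")
    lowered = text.lower()
    return sum(skill in lowered for skill in skills) / len(skills)


def leaky_scorer(text: str) -> float:
    """Deliberately flawed stand-in whose score depends on the name."""
    return keyword_scorer(text) + (0.1 if "Emily" in text else 0.0)
```

An empty result means the scorer treated each name pair identically on that resume. A non-empty result points at the specific pairs and scores to investigate.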

Transparency plays a key role in regulatory compliance. For instance, GDPR gives candidates rights around solely automated decisions, often described as a "right to explanation." Tools like SHAP and LIME can help by showing which features drove a specific ranking or decision. For EEOC compliance, apply the four-fifths rule, which checks that the selection rate for each protected group is at least 80% of the rate for the group with the highest selection rate. A case study from July 2025 highlights this: Eightfold AI tested their Match Score system against GPT-4o using 10,000 anonymized candidate–job pairs. Their domain-specific model achieved a race Impact Ratio of 0.957, while GPT-4o fell to 0.774, below the 0.80 threshold.
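In code, a four-fifths check is a short calculation. The sketch below assumes you already have selected and applicant counts per group; the function names and the group labels in the usage note are hypothetical.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / applicants, skipping groups with no applicants."""
    return {
        group: selected / applicants
        for group, (selected, applicants) in outcomes.items()
        if applicants > 0
    }


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    top_rate = max(rates.values(), default=0.0)
    if top_rate == 0.0:
        return {}  # nobody was selected, so no ratio is defined
    return {group: rate / top_rate for group, rate in rates.items()}


def four_fifths_flags(
    outcomes: dict[str, tuple[int, int]], threshold: float = 0.80
) -> list[str]:
    """Return the groups whose impact ratio falls below the threshold."""
    ratios = impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)
```

For example, `four_fifths_flags({"group_a": (50, 100), "group_b": (35, 100)})` compares a 35% selection rate with a 50% rate, giving a ratio of 0.70 and flagging `group_b`. By the same threshold, the 0.957 ratio cited above passes and the 0.774 ratio does not.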

Human oversight is essential at every stage of the hiring process. AI should serve as an advisory tool, not the final decision-maker. Keep a log of instances where recruiters override AI recommendations - these override rates can expose both weaknesses in the algorithm and potential human biases. In fact, organizations combining AI with human oversight have reported a 45% drop in biased decisions. It's equally important to train HR teams on how the algorithms work, enabling them to recognize anomalies and make informed decisions about when to trust or challenge the system’s outputs.
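One simple way to keep such a log and read override rates from it is sketched below. The `Decision` record and its fields are hypothetical; the group field is assumed to be stored for monitoring only and kept out of the scoring model.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    candidate_id: str
    group: str                # used for monitoring only, never for scoring
    ai_recommendation: str    # e.g. "advance" or "reject"
    recruiter_decision: str   # what the recruiter actually did


def override_rates(log: list[Decision]) -> dict[str, float]:
    """Share of decisions per group where the recruiter overrode the AI."""
    totals: Counter[str] = Counter()
    overrides: Counter[str] = Counter()
    for decision in log:
        totals[decision.group] += 1
        if decision.ai_recommendation != decision.recruiter_decision:
            overrides[decision.group] += 1
    return {group: overrides[group] / totals[group] for group in totals}
```

A markedly higher override rate for one group is a prompt to look in both directions: the model may be scoring that group poorly, or reviewers may be second-guessing it unevenly.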

To measure progress, monitor key metrics like funnel pass-through rates (e.g., Applied → Screened → Interviewed → Offered → Hired) to pinpoint where diverse candidates might be dropping off. Track time-to-first-decision to ensure no group experiences unnecessary delays, and review 90-day retention rates to assess the quality of hires. For example, in July 2025, V2Solutions reported that an HR tech company implementing fairness controls saw 80,000 new sign-ups and a 50% increase in candidate engagement within a year, directly linked to improved transparency.
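The pass-through calculation can be sketched as below, assuming you have a candidate count per funnel stage for each group. The stage names follow the funnel above; the function names and group labels are hypothetical.

```python
STAGES = ["Applied", "Screened", "Interviewed", "Offered", "Hired"]
TRANSITIONS = [f"{a} -> {b}" for a, b in zip(STAGES, STAGES[1:])]


def pass_through_rates(counts: dict[str, int]) -> dict[str, float]:
    """Fraction of candidates moving from each stage to the next."""
    rates = {}
    for prev, nxt in zip(STAGES, STAGES[1:]):
        if counts.get(prev, 0) > 0:
            rates[f"{prev} -> {nxt}"] = counts.get(nxt, 0) / counts[prev]
    return rates


def largest_gap(funnel_by_group: dict[str, dict[str, int]]) -> tuple[str, float]:
    """Find the transition where pass-through differs most between groups.

    Assumes at least one group and at least one transition shared by all groups.
    """
    per_group = {g: pass_through_rates(c) for g, c in funnel_by_group.items()}
    shared = [t for t in TRANSITIONS if all(t in r for r in per_group.values())]
    gaps = {
        t: max(r[t] for r in per_group.values()) - min(r[t] for r in per_group.values())
        for t in shared
    }
    worst = max(shared, key=lambda t: gaps[t])
    return worst, gaps[worst]
```

Looking at stage-to-stage rates rather than overall hire rates helps locate the step where candidates from one group drop off, which is usually where a fix is needed.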

Finally, document each AI system thoroughly using model cards. These should outline data sources, exclusions, fairness checks, and known limitations. Such documentation is invaluable during audits and helps your team clearly understand the tool’s capabilities and constraints.
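A model card does not need special tooling. The sketch below stores the sections named above in a plain dataclass and flags any that were left empty; the field names and the `ModelCard` class are illustrative assumptions, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ModelCard:
    model_name: str
    version: str
    data_sources: list[str]
    exclusions: list[str]         # e.g. fields deliberately kept out of the model
    fairness_checks: list[str]    # e.g. audits run and their dates
    known_limitations: list[str]
    last_reviewed: str            # ISO date string

    def missing_sections(self) -> list[str]:
        """Names of sections that were left empty."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize the card so it can be versioned alongside the model."""
        return json.dumps(asdict(self), indent=2)
```

Checking `missing_sections()` before a release is a cheap way to make sure the limitations and fairness checks were actually written down rather than left for later.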

What's Next for AI in Fair Hiring

AI is now capable of real-time bias detection, constantly monitoring interviews and screening processes to flag bias patterns as they emerge. For instance, H&M Group introduced an "Ethical AI Debate Club" in 2025, where diverse teams tackle ethical dilemmas in realistic hiring scenarios to prevent "fairness drift". Similarly, Microsoft launched "Responsible AI Champs", a program where domain experts within business units identify ethical concerns and challenge assumptions during the rollout of new tools. This kind of immediate feedback is helping companies develop smarter, more forward-thinking hiring strategies.

AI is also reshaping workforce planning. By analyzing market trends and identifying skills gaps, it enables a shift from reactive to proactive hiring. By 2026, only 37% of employers are expected to rely on credentials, as "skills-first" hiring becomes the norm. This approach minimizes reliance on gut instincts, which often lead to bias, especially during rushed hiring cycles. Already, nearly 90% of companies are using some form of AI in their recruitment efforts.

"There shouldn't be a dichotomy between choosing accuracy and fairness in hiring: a well-designed algorithm can achieve both." – Eitan Anzenberg et al., Eightfold.ai

AI is also driving hyper-personalization in hiring. By tailoring the application process to individual candidates and roles, it reduces barriers for underrepresented groups. Autonomous AI agents now handle tasks like sourcing and scheduling, freeing up about eight hours per week for recruiters. These advancements not only improve the candidate experience but also make fair hiring tools more accessible by lowering costs.

Affordability is another area where AI is making strides. Platforms like Navero provide enterprise-grade fairness tools at reduced costs, making them available to smaller companies. Domain-specific models are achieving near-equal bias impact ratios, with fairness maintained through regular 90-day reviews. As Nathan Trousdell, Founder & CEO of Navero, explains:

"AI must improve - not replace - human decision making in hiring"

The focus is on treating fairness as a continuous process that enhances hiring decisions, rather than a one-time software adjustment. AI is proving to be a powerful ally in creating a more equitable hiring landscape.

Conclusion

Hiring bias isn't just an ethical dilemma - it’s a business challenge that can limit access to top talent. The seven AI tools discussed here aim to create a fairer hiring process through features like blind screening, skills-based assessments, and real-time bias detection. When used effectively, these tools can help you build diverse teams that outperform less varied ones, all while cutting time-to-hire by as much as 75%.

AI works best as a partner in hiring decisions, not as the sole decision-maker. As Sandra Wachter, Professor at the University of Oxford, explains:

"Having AI that is unbiased and fair is not only the ethical and legally necessary thing to do, it is also something that makes a company more profitable."

To achieve this, it’s crucial to maintain human oversight, perform regular audits, and use explainable scoring systems to uncover and address hidden biases before they affect candidates. This approach lays the groundwork for effective, bias-aware hiring practices.

Start by identifying your biggest hiring bottleneck - whether it’s screening resumes, standardizing interviews, or evaluating skills. Use blind screening to hide personal details, implement structured interviews to ensure consistency, and track pass-through rates at every stage of your hiring process.

For job seekers, platforms like JobLogr offer tools to optimize resumes, craft cover letters, and prepare for interviews, helping candidates navigate both human and algorithmic biases. With the growing emphasis on skills-first hiring, what you can do matters more than where you’ve worked or studied.

Creating a fair hiring process requires ongoing effort. The evidence is clear: these tools can make a difference. It’s time to put them into action.

FAQs

Can AI hiring tools still be biased?

AI hiring tools, while advanced, can still reflect bias. This often stems from biased training data, flawed algorithms, or even the way results are presented. If these factors aren’t addressed properly, they can result in unfair screening practices or even discrimination during the recruitment process.

What should I audit to prove fair hiring?

To ensure fair hiring practices when using AI tools, it’s crucial to focus on a few key areas:

- Algorithmic bias: Evaluate the system for any unequal impacts across different demographic groups. This helps identify and address potential discrimination.

- Training data: Review the data used to train the AI to confirm it represents a diverse and balanced population. This step minimizes the risk of skewed results.

- Decision-making process: Maintain transparency by documenting how decisions are made and holding the process accountable.

It’s also essential to regularly test the AI's outputs, monitor candidate outcomes, and keep detailed records of decisions. These actions make it easier to spot and correct bias, ensuring compliance and promoting fair hiring practices over time.

How can job seekers reduce ATS rejections with JobLogr?

Job seekers can sidestep ATS (Applicant Tracking System) rejections by leveraging JobLogr’s AI-driven tools to fine-tune their resumes and application materials. With features like resume analysis and customized tailoring, users can remove biased language and ensure their documents meet ATS-friendly formatting standards.

On top of that, JobLogr offers tools for personalized cover letter creation and interview preparation, helping candidates craft polished, relevant applications that align with ATS requirements. These tools can boost their chances of moving forward in the hiring process.